Let's have some fun with a look at some realistic Google Cloud Professional ML Engineer exam questions.

If you want more practice like this, check out this link.

Okay, let’s begin.

Question 1: Comparing models

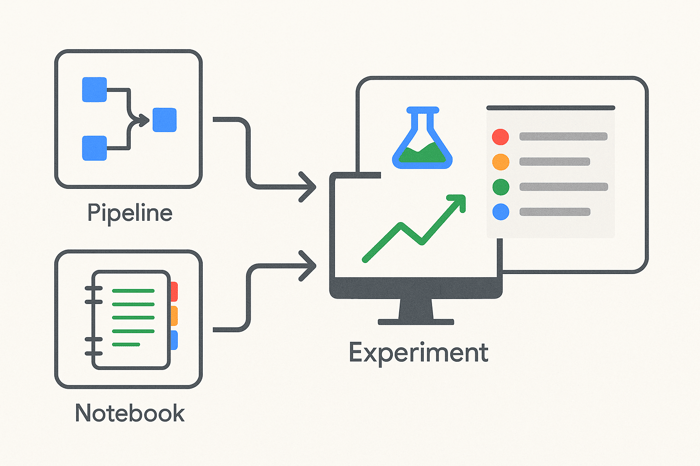

Your company builds machine learning models to forecast product demand in different regions. Some models are developed using Vertex AI Workbench notebooks, while others are built into Vertex AI Pipelines. You need a simple, centralized way to compare model versions, hyperparameters, and evaluation results across both environments, while minimizing the extra coding work required.

Choose only ONE best answer.

A) Set up a Cloud Storage bucket to manually upload model parameters and evaluation metrics from notebooks and pipelines after each training run.

B) Use the Vertex AI Metadata API to write all model inputs and outputs, and build a custom dashboard to track comparisons.

C) Create a Vertex AI experiment. Track pipeline runs automatically as experiment runs, and use the Vertex AI SDK in notebooks to log parameters and metrics.

D) Move all model development into Vertex AI Pipelines, and manually add steps in each pipeline to save the training results into a BigQuery table.

Analysis

Take a moment to think through this question. Let's analyze each option:

Option A talks about manually uploading parameters and metrics to a Cloud Storage bucket. I mean, you could do that — it's simple enough to set up. But then, how are you going to organize all that data across different models and environments? Would you need to build extra tools just to make sense of it later?

Option B brings up the Vertex AI Metadata API. It’s definitely designed to track information about models, which sounds useful. But does it really give you an easy way to group and compare runs directly? Or would you have to create your own system to make those comparisons work?

Option C suggests creating a Vertex AI experiment and using the Vertex AI SDK to log runs from both pipelines and notebooks. On the surface, that sounds pretty streamlined. Still, I wonder — would setting up experiments add much overhead for the team, or does it actually end up saving effort compared to building something custom?

Option D mentions reworking everything into pipelines and sending the results to BigQuery. Sure, BigQuery is great for querying large datasets, but is it worth the trouble of rewriting your existing notebook workflows just to get the metrics into a table? And would that really help you compare models easily, or just store their outputs?

The critical requirement is minimizing effort while still being able to easily compare and track models across both notebooks and pipelines.

So, the answer:

C) Create a Vertex AI experiment. Track pipeline runs automatically as experiment runs, and use the Vertex AI SDK in notebooks to log parameters and metrics.

Why?

Vertex AI Experiments provides an integrated way to track, compare, and visualize models trained in different environments. By linking both pipelines and notebooks into a single experiment using the Vertex AI SDK, you get organized tracking with minimal extra coding. It fits naturally into both workflows.

Why the other answers are wrong:

A) Manually uploading to Cloud Storage is tedious and doesn’t offer built-in experiment management.

B) The Vertex AI Metadata API helps store details, but you would need to build your own system for comparisons and visualizations.

D) Forcing everything into pipelines and exporting to BigQuery increases work and removes the flexibility of using notebooks easily.

Vertex AI Experiments is definitely something that would show up on the Professional ML Engineer exam. Let’s try another one:

Question 2: Reduce Overfitting

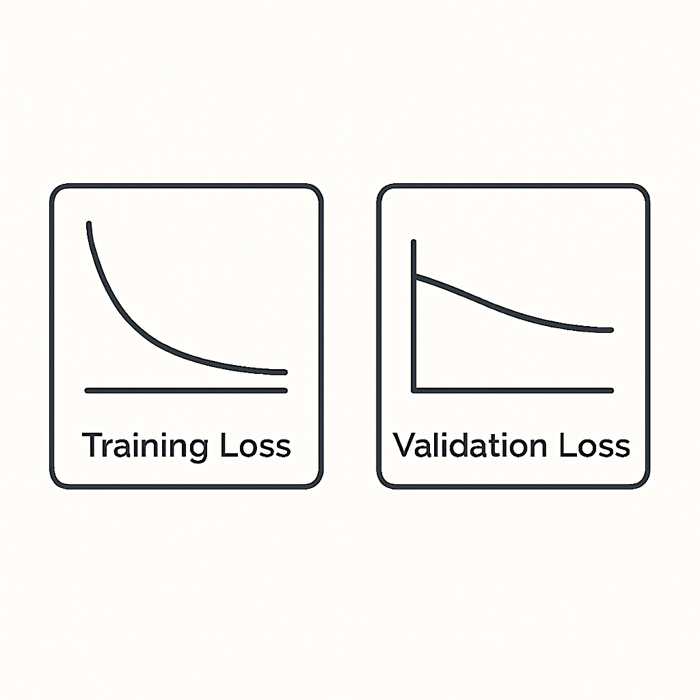

Your team is developing a customer churn prediction model using TensorFlow on Vertex AI Workbench. You have split your dataset into training, validation, and test sets, all with similar distributions. After training a dense neural network, you notice in TensorBoard that the training loss has stabilized around 1.0, but the validation loss remains about 0.4 higher. You want to adjust your training approach to bring the two losses closer together and reduce the risk of overfitting.f

Choose only ONE best answer.

A) Decrease the learning rate to stabilize training and allow the model to converge better.

B) Add L2 regularization to the model to address the validation loss gap and limit overfitting.

C) Increase the batch size to make optimization smoother and help the model generalize better.

D) Add more hidden layers to the network to capture more complex patterns and improve validation loss.

Analysis

Take a moment to think about this problem. We need a solution that:

Brings the training and validation losses closer together

Reduces overfitting

Does not simply slow down or complicate training

Let's analyze each option:

Option A talks about decreasing the learning rate. Slowing things down could help the model converge more smoothly, but if the problem is overfitting — and it looks like it is, given that validation loss is much higher — would just changing the learning rate really solve that? Or would the model just overfit more slowly?

Option B brings up adding L2 regularization. That’s interesting, because L2 actually pushes the model toward simpler weight values. Simpler patterns usually mean better generalization, which is exactly what you want if validation loss is too high compared to training loss. Maybe this tackles the root cause instead of just the symptoms.

Option C suggests increasing the batch size. Bigger batches usually make optimization smoother, sure. But when it comes to overfitting, is batch size really the lever you'd want to pull first? It might help a little—or it might do nothing, or even make it harder for the model to generalize.

Option D mentions adding more hidden layers. It sounds tempting if you’re thinking, “Maybe the model just isn’t powerful enough.” But wait — if the model is already overfitting, making it even bigger probably won’t help. It might just memorize the training data even more.

The key requirement is reducing overfitting and helping the model generalize better to validation data.

So the answer is:

B) Add L2 regularization to the model to address the validation loss gap and limit overfitting.

Adding L2 regularization provides the optimal solution for reducing overfitting and improving the generalization of your neural network. L2 regularization works by penalizing large weight values during training, encouraging the model to learn simpler, more robust patterns instead of memorizing the training data. This directly addresses the gap between training and validation losses by limiting model complexity in a controlled way. Applying L2 regularization helps the model perform better on unseen data, bringing the training and validation losses closer together without fundamentally changing the learning rate, network size, or training process.

Why are the other answers wrong?

A) Decreasing the learning rate improves stability but doesn't address the overfitting causing the validation loss gap.

C) Increasing batch size affects optimization speed but not overfitting directly.

D) Adding more hidden layers increases model complexity, making overfitting worse rather than better.

Reducing overfitting is a classic challenge that is highly likely to be tested on the Professional ML Engineer certification exam.

One more for today:

Question 3: Vertex AI Endpoint Error

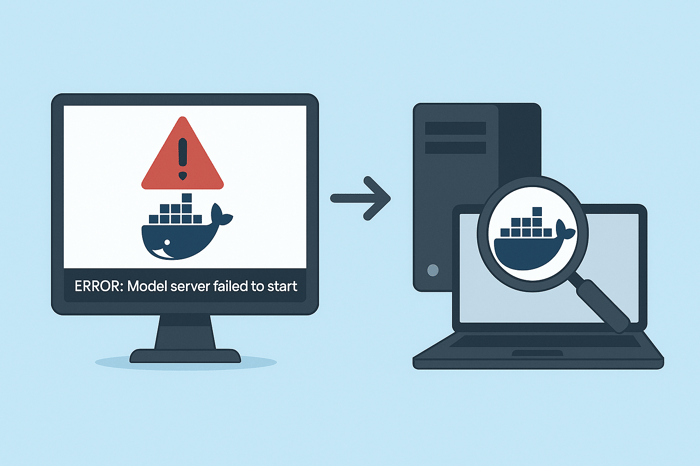

You're working for a retail company that built a custom recommendation model packaged inside a Docker container. You deployed the model to a Vertex AI endpoint for online prediction. However, when sending prediction requests, the endpoint stays unavailable, and the error logs show that the model server never became ready. Vertex AI logs do not provide detailed startup errors. You need to diagnose the issue and restore the serving endpoint.

Choose only ONE best answer.

A) Rebuild the container with a different base image that matches one of Vertex AI’s prebuilt containers.

B) Download the Docker image locally and run it with docker run. Use docker logs to inspect any startup errors inside the container.

C) Modify the container’s start command to add a health check script that pings the model server.

D) Enable debug logging inside Vertex AI Model Monitoring to capture detailed logs during container startup.

Analysis

Take a moment to think about this situation. We need to:

Diagnose why the container isn’t starting properly

Get access to detailed error logs

Minimize guesswork when fixing the issue

Let's analyze each option:

Option A brings up rebuilding the container with a different base image. That might help if the base image is the problem - but right now, you don’t even know what’s wrong. Rebuilding feels like shooting in the dark - and you could easily introduce new issues without solving the original one.

Option B says to download and run the Docker image locally. That sounds more grounded — if you run it yourself, you can actually see what’s happening when it tries to start. No guesswork, no waiting on Vertex AI logs that might not tell you much. It’s probably the fastest way to get real information about why the container won’t come up.

Option C talks about adding a health check script. Health checks are great if the server is running and you just want to monitor its status... but if the server can’t even start? Not really going to help at that point.

Option D suggests enabling debug logging in Model Monitoring. Debug logs are definitely useful — once the model is serving. But here, the container never even gets to that stage. So would debug logs actually show anything useful if the server isn't even alive yet?

Remember, the key requirement is gaining detailed visibility into why the container isn’t starting.

So the answer is:

B) Download the Docker image locally and run it with docker run. Use docker logs to inspect any startup errors inside the container.

Running the Docker image locally provides the optimal way to diagnose container startup issues. By using docker run and docker logs, you gain full visibility into any errors that prevent the model server from becoming ready, such as missing files, incorrect configurations, or dependency problems. This direct approach isolates the environment from Vertex AI’s managed infrastructure, allowing you to troubleshoot precisely and efficiently. It ensures you can identify and fix the root cause before redeploying the model, rather than making speculative changes that could introduce new problems.

Why the other choices are wrong:

A) Rebuilding with a different base image without diagnosing the problem is risky and could introduce new issues.

C) Health checks assume the server starts, but here the container doesn’t start at all.

D) Model Monitoring logs only appear after the endpoint is live; they won’t capture container startup failures.

Although you might think that using Docker images is not that relevant to being a machine learning engineer, it's a foundational skill that greatly improves your ability to use tools like Vertex AI, and has a good chance of showing up on the Google Cloud Professional ML Engineer exam.

I hope you found this exercise valuable. The questions on the real Professional ML Engineer exam can be pretty tough, but with the right course and quality explanations you can tackle them and learn immensely in the process.

There are many more example questions like this in my full Professional ML Engineer course.