Understanding Watermarks in Dataflow: What They Are and Why They Matter

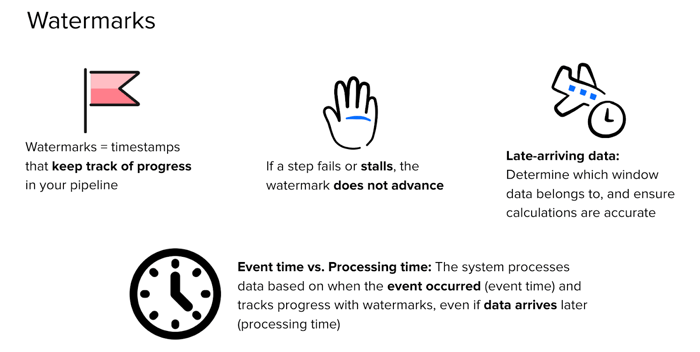

Watermarks are one of the most important concepts to understand when working with Dataflow, both in real-world streaming pipelines and on GCP certification exams. In Dataflow, a watermark is a timestamp that tracks how far a pipeline has progressed through event time. You can think of it as a moving checkpoint: it is the system's estimate that all data with event timestamps up to this point has arrived. As more events arrive and are processed, the watermark moves forward.

This mechanism helps Dataflow manage windowed operations accurately. For instance, if you're calculating aggregates over five-minute intervals, the watermark tells the system when the data for a given window is expected to be complete, so the window's output is emitted only after the watermark passes the end of that window.
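To make the five-minute example concrete, here is a minimal sketch in plain Python rather than the Dataflow API. The helper names (`window_start`, `closed_window_sums`) and the sample events are hypothetical, and the rule "emit a window once the watermark reaches its end" is a simplification of what the service does.

```python
from collections import defaultdict
from datetime import datetime, timedelta

WINDOW = timedelta(minutes=5)  # assumed window size, matching the five-minute example


def window_start(event_time: datetime) -> datetime:
    """Return the start of the fixed five-minute window containing event_time."""
    epoch = datetime(1970, 1, 1)
    offset = (event_time - epoch) % WINDOW
    return event_time - offset


def closed_window_sums(events, watermark):
    """Sum values per window, keeping only windows whose end is at or before the watermark."""
    sums = defaultdict(int)
    for event_time, value in events:
        sums[window_start(event_time)] += value
    return {start: total for start, total in sums.items() if start + WINDOW <= watermark}


# Hypothetical (event_time, value) pairs.
events = [
    (datetime(2024, 1, 1, 12, 1), 3),
    (datetime(2024, 1, 1, 12, 4), 4),
    (datetime(2024, 1, 1, 12, 7), 10),
]

# With the watermark at 12:06, only the 12:00-12:05 window is ready to emit.
result = closed_window_sums(events, watermark=datetime(2024, 1, 1, 12, 6))
print(result)
```

The 12:05-12:10 window is held back because the watermark has not yet reached 12:10, so its aggregate could still change.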

But what happens when something in the pipeline stalls? If a step fails or slows down, the watermark in Dataflow doesn’t move forward. Instead, it waits. This is intentional—it prevents the system from skipping ahead and missing important data. The watermark acts like a stop sign, helping Dataflow avoid incomplete results.

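Late-arriving data is another common scenario, especially in distributed systems. Just like a delayed flight still lands, data can arrive after its expected time. An event is considered late when its timestamp is behind the current watermark, meaning the watermark has already passed the end of the event's window. As long as the event arrives within the window's allowed lateness, Dataflow can still accept and process it; data that arrives after that period is dropped. This gives the pipeline flexibility to integrate stragglers without compromising accuracy.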
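A rough sketch of how a window might treat a straggler, assuming an allowed-lateness setting as in Apache Beam's windowing model. The function name `classify` and the labels it returns are hypothetical, and the exact boundary handling in the real service may differ.

```python
from datetime import datetime, timedelta


def classify(window_end, watermark, allowed_lateness):
    """Classify an arriving element by comparing the watermark to its window's end."""
    if watermark < window_end:
        return "on_time"        # the window is still open
    if watermark < window_end + allowed_lateness:
        return "late_accepted"  # behind the watermark, but within allowed lateness
    return "dropped"            # too far behind the watermark


window_end = datetime(2024, 1, 1, 12, 5)
lateness = timedelta(minutes=2)  # assumed allowed lateness

labels = [
    classify(window_end, datetime(2024, 1, 1, 12, 3), lateness),
    classify(window_end, datetime(2024, 1, 1, 12, 6), lateness),
    classify(window_end, datetime(2024, 1, 1, 12, 8), lateness),
]
print(labels)
```

Note that the decision depends on where the watermark is when the element arrives, not on the wall-clock time of arrival.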

This ties into an important distinction: event time versus processing time. Event time is when the action actually occurred, such as a user click or a sensor reading. Processing time is when the data arrives at the system. Dataflow uses event time (not processing time) to drive watermarks, ensuring that data is handled in a way that reflects when things truly happened, not just when they were received.

In short, watermarks help Dataflow stay aligned with the real sequence of events, allowing for accurate, consistent stream processing—even when data shows up late or out of order.

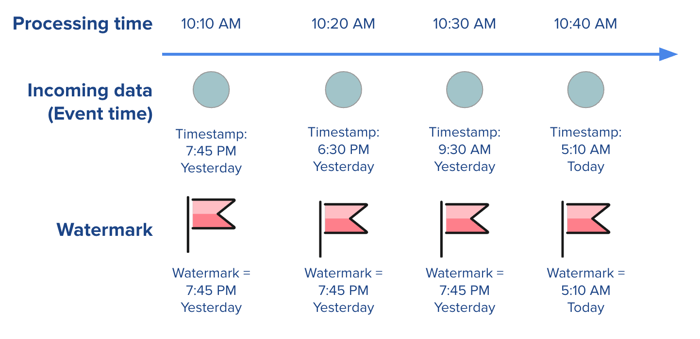

A Visual Walkthrough of How Watermarks Work

To really understand how watermarks operate in Dataflow, let’s walk through the example shown in the image above.

The top line represents processing time—that is, the actual clock time when the system receives each piece of data. Below that, you’ll see the event time, which is the timestamp attached to each data point indicating when the event actually occurred. And at the bottom, we track how the watermark moves (or doesn’t move) over time.

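At 10:10 AM, the system receives a data point with an event timestamp of 7:45 PM yesterday. Since this is the most recent event timestamp seen so far, the system sets the watermark to that value. This means, "We've now processed all events up to 7:45 PM yesterday." (This walkthrough uses a simplified model in which the watermark follows the latest event timestamp seen; in practice, Dataflow computes the watermark as a heuristic estimate of how complete the input is.)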

But things get more interesting as new data arrives.

At 10:20 AM, the system receives another data point—this time, with an event timestamp of 6:30 PM yesterday. Even though it arrives later in real time, it happened earlier in event time. Since it's older than the current watermark, the system holds the watermark steady at 7:45 PM yesterday. It won’t advance until it’s sure no more earlier events are coming.

Then, at 10:30 AM, another piece of data comes in. Its timestamp? 9:30 AM yesterday. Once again, we’re seeing late data. The system still can’t advance the watermark—it continues to wait at 7:45 PM yesterday to ensure everything before that point has been captured.

Finally, at 10:40 AM, a new event arrives with a timestamp of 5:10 AM today. This is the first event timestamp later than the current watermark. At this point, the system can confidently move the watermark forward to 5:10 AM today.

This example highlights the core idea behind watermarks: they help Dataflow manage out-of-order data by holding back progress until it’s safe to move on. The watermark doesn’t care when data arrives; it cares when the event happened. That’s how Dataflow ensures accuracy and order, even in the presence of delays.
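The walkthrough can be replayed in a few lines of plain Python. This sketch uses the same simplified rule as the example (the watermark only moves when a later event timestamp arrives), not Dataflow's real watermark estimation. The calendar dates are assumptions chosen so that "yesterday" is 2024-01-01 and "today" is 2024-01-02, and `track_watermark` is a hypothetical helper.

```python
from datetime import datetime

# (processing time, event time) pairs from the walkthrough.
arrivals = [
    ("10:10", datetime(2024, 1, 1, 19, 45)),  # 7:45 PM yesterday
    ("10:20", datetime(2024, 1, 1, 18, 30)),  # 6:30 PM yesterday
    ("10:30", datetime(2024, 1, 1, 9, 30)),   # 9:30 AM yesterday
    ("10:40", datetime(2024, 1, 2, 5, 10)),   # 5:10 AM today
]


def track_watermark(arrivals):
    """Return the watermark after each arrival; it never moves backward."""
    watermark = None
    history = []
    for processing_time, event_time in arrivals:
        if watermark is None or event_time > watermark:
            watermark = event_time
        history.append((processing_time, watermark))
    return history


history = track_watermark(arrivals)
for processing_time, watermark in history:
    print(processing_time, "->", watermark.isoformat(sep=" "))
```

The watermark stays at 7:45 PM yesterday for the first three arrivals and moves to 5:10 AM today only on the fourth.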

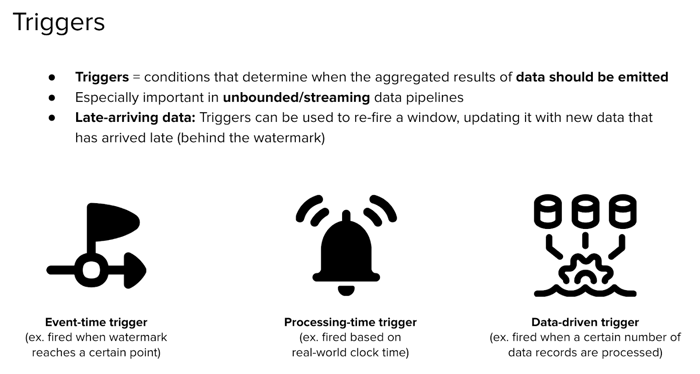

Understanding Triggers in Streaming Pipelines

In streaming data pipelines—especially unbounded ones where data flows in continuously—you need clear rules for when to emit results. That’s where triggers come in.

Triggers are the conditions that determine when a pipeline should output aggregated results, like the total from a time window. This is a core part of how stream processing works in systems like Apache Beam or Dataflow. Without them, you'd either emit too early and miss data, or emit too late and delay insights.

Now, one key challenge in streaming pipelines is late-arriving data—data that shows up after the watermark has already passed. But late data doesn’t have to be discarded. Triggers can be configured to re-fire a window, letting that late data update the final result. This helps maintain both accuracy and completeness, even when data doesn’t arrive on time. Of course, this only works if the pipeline is set up with allowed lateness to accept data behind the watermark.
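The re-firing behavior can be sketched for a single window in plain Python. This is an illustrative model, not the Beam or Dataflow API: `window_panes` is a hypothetical helper, each element carries the watermark value at the moment it arrived, and results accumulate across firings (one of several possible choices).

```python
from datetime import datetime, timedelta


def window_panes(elements, window_end, allowed_lateness):
    """Return the (label, running_sum) results emitted for one window.

    elements: (watermark_at_arrival, value) pairs in arrival order.
    """
    panes = []
    total = 0
    on_time_fired = False
    for watermark, value in elements:
        # The first time the watermark is seen past the window end, emit the on-time result.
        if watermark >= window_end and not on_time_fired:
            panes.append(("on_time", total))
            on_time_fired = True
        # Elements arriving after the allowed lateness has expired are dropped.
        if watermark >= window_end + allowed_lateness:
            continue
        total += value
        # A late but accepted element re-fires the window with an updated total.
        if on_time_fired:
            panes.append(("late", total))
    if not on_time_fired:
        # Assume the watermark eventually passes the window end.
        panes.append(("on_time", total))
    return panes


window_end = datetime(2024, 1, 1, 12, 5)
elements = [
    (datetime(2024, 1, 1, 12, 1), 5),
    (datetime(2024, 1, 1, 12, 3), 7),
    (datetime(2024, 1, 1, 12, 7), 2),   # late, within allowed lateness
    (datetime(2024, 1, 1, 12, 20), 9),  # late, beyond allowed lateness
]
panes = window_panes(elements, window_end, allowed_lateness=timedelta(minutes=10))
print(panes)
```

The window first emits 12, then re-fires with 14 when the accepted late element arrives; the last element is ignored because the allowed lateness has expired.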

There are several types of triggers, but the three most common ones are illustrated in the image above:

Event-time trigger: This one fires when the watermark reaches a certain point in time. It’s the default for many pipelines because it aligns with the actual event timestamps and ensures windows are emitted only once we’re confident that all the data for that time range has been received.

Processing-time trigger: This type of trigger is based on real-world clock time. Instead of watching event timestamps or watermarks, it looks at when the data is physically being processed. For example, you might set it to fire every 5 minutes, regardless of the events’ timestamps.

Data-driven trigger: This trigger has nothing to do with time. Instead, it fires when some condition related to the data itself is met, like when 100 records are received. In Apache Beam, the built-in data-driven triggers fire on element count. These triggers are great for use cases where volume, not timing, should determine when results are emitted.

Each of these triggers gives you different ways to control the behavior of your pipeline. Whether you care about completeness (event time), responsiveness (processing time), or throughput (data-driven), triggers let you tailor your stream output logic to your use case.
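The three trigger types can be compared side by side as simple predicates over a window's state. This is a conceptual sketch in plain Python, not the Beam API: the `WindowState` class, its fields, and the default thresholds are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class WindowState:
    window_end: datetime                  # event-time end of the window
    watermark: datetime                   # current event-time watermark
    first_element_processed_at: datetime  # wall-clock time the first element was processed
    now: datetime                         # current wall-clock time
    element_count: int                    # elements buffered in the window so far


def event_time_trigger(state):
    """Fire once the watermark reaches the end of the window."""
    return state.watermark >= state.window_end


def processing_time_trigger(state, delay=timedelta(minutes=5)):
    """Fire once `delay` of wall-clock time has elapsed since the first element."""
    return state.now - state.first_element_processed_at >= delay


def data_driven_trigger(state, threshold=100):
    """Fire once the window holds at least `threshold` elements."""
    return state.element_count >= threshold


state = WindowState(
    window_end=datetime(2024, 1, 1, 12, 5),
    watermark=datetime(2024, 1, 1, 12, 3),
    first_element_processed_at=datetime(2024, 1, 2, 9, 10),
    now=datetime(2024, 1, 2, 9, 16),
    element_count=100,
)
fired = {
    "event_time": event_time_trigger(state),
    "processing_time": processing_time_trigger(state),
    "data_driven": data_driven_trigger(state),
}
print(fired)
```

In the Apache Beam Python SDK, the corresponding building blocks live in `apache_beam.transforms.trigger` as `AfterWatermark`, `AfterProcessingTime`, and `AfterCount`; the sketch above only mirrors their firing conditions.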
