Google Cloud announced the Generative AI Leader certification on May 14, 2025.

This foundational-level certification sits alongside the Cloud Digital Leader certification in terms of difficulty and targets people in business roles rather than technical ones.

Let's look in more detail at what the Generative AI Leader certification tests and what it reveals about Google's idea of the role.

If you want to actually prepare for the Generative AI Leader certification exam, you can sign up for my course.

What The Generative AI Leader Certification Actually Is

According to Google's own description of the exam, the Generative AI Leader certification attempts to identify "visionary professionals with comprehensive knowledge of how generative AI can transform and be used within a business."

It's important to note here that the emphasis is on business transformation, not technical implementation. You won't be tested on the technical details of generative AI models, Vertex AI, or GCP. The GCP exam that covers those technical topics is the Professional Machine Learning Engineer exam.

In contrast, with the Generative AI Leader certification, you'll be tested on your ability to engage in meaningful conversation about the technology and translate that to the business.

This is a foundational-level certification, which is different from the associate-level and professional-level GCP certifications.

The fact that it's a foundational certification means that it is focused more on breadth of knowledge and on bridging the gap for business leaders. It requires you to understand what the options are and which tools to use, rather than deep technical expertise about how to use them.

Exam Guide Section 1: Fundamentals of Gen AI (30% of the exam)

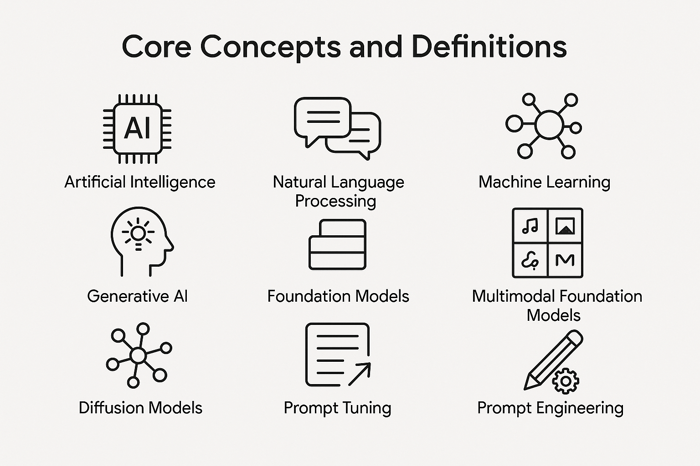

Core Concepts and Definitions

This section is focused on theory and understanding of AI concepts rather than actual GCP tools. According to the exam guide, you'll need to define "artificial intelligence, natural language processing, machine learning, generative AI, foundation models, multimodal foundation models, diffusion models, prompt tuning, prompt engineering, large language models."

You'll likely need to understand how these concepts relate to each other and to business applications, not just memorize their definitions. For instance, you should know that foundation models are pre-trained on massive datasets and can be adapted for multiple tasks, while diffusion models generate outputs such as images through an iterative denoising process.

Machine Learning Approaches and Lifecycle

You need to have a cursory understanding of the ML lifecycle stages. The exam guide specifically mentions "data ingestion, data preparation, model training, model deployment, and model management" as key stages you must understand, along with "the Google Cloud tools for each stage."

In the data ingestion and collection phase, we gather data from various sources, such as databases, files, or APIs. The goal here is to collect relevant, quality data that will be used to train our models.

Next is data preparation and processing. Raw data often needs cleaning and transformation. In this stage, we handle tasks like removing duplicates, normalizing values, and preparing the data for modeling. This is a crucial step, as the quality of the processed data directly impacts model performance.
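As a rough illustration of the cleaning steps above, here is a minimal Python sketch that removes exact duplicate rows and rescales a numeric column to the 0 to 1 range. The field names (`customer_id`, `monthly_spend`) and both helper functions are made up for this example, and min-max scaling is just one common way to normalize values. The exam won't ask you to write anything like this.

```python
def deduplicate(rows):
    """Drop exact duplicate rows while keeping the first occurrence."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(sorted(row.items()))  # hashable fingerprint of the row
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def min_max_normalize(values):
    """Rescale numbers to the 0..1 range (one common normalization choice)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # all values identical, nothing to scale
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical raw data with one repeated row.
raw_rows = [
    {"customer_id": 1, "monthly_spend": 10},
    {"customer_id": 1, "monthly_spend": 10},
    {"customer_id": 2, "monthly_spend": 30},
    {"customer_id": 3, "monthly_spend": 20},
]

clean_rows = deduplicate(raw_rows)
scaled_spend = min_max_normalize([r["monthly_spend"] for r in clean_rows])
print(len(clean_rows), scaled_spend)
```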

For model training, we first divide the dataset into two parts: one for training the model and another for testing its performance. Typically, around 70-80% of the data is used for training, while 20-30% is reserved for testing. We feed the training data into our machine learning algorithm, allowing the model to learn patterns. During this stage, we might also validate the model on held-out data to tune its settings and improve accuracy.
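To make the split concrete, here is a minimal sketch of an 80/20 train/test split using only Python's standard library. The `train_test_split` helper and the fixed seed are assumptions for illustration; in practice a data team would usually reach for a library function that does the same job.

```python
import random


def train_test_split(rows, test_fraction=0.2, seed=42):
    """Shuffle a copy of rows and split it into (train, test) lists."""
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


records = list(range(100))  # stand-in for 100 labeled examples
train, test = train_test_split(records, test_fraction=0.2)
print(len(train), len(test))
```

Shuffling before splitting matters because data is often stored in some order (by date, by customer), and a model tested only on the "last" slice can look better or worse than it really is.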

After training, you would typically perform model evaluation. Using the test data, we assess the model’s performance. Metrics such as accuracy, precision, and recall, or others depending on the use case, help us determine if the model is ready for deployment.
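If the metric names feel abstract, this small sketch shows how accuracy, precision, and recall fall out of a list of true labels and predictions for a yes/no classifier. The `classification_metrics` function and the sample labels are hypothetical and exist only to illustrate the arithmetic.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, and recall for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        # Of everything flagged positive, how much really was positive?
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        # Of everything truly positive, how much did the model catch?
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Made-up test labels (1 = positive, 0 = negative) and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Which metric matters most is a business decision: a fraud team may accept lower precision to get higher recall, while a marketing team may prefer the opposite.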

Finally, once the model is evaluated, we move to model deployment and monitoring. The trained model is deployed into a production environment where it can make predictions on new data. Post-deployment, we continuously monitor the model to ensure it performs well as data evolves.

The Generative AI Leader certification expects you to have a cursory understanding of these stages. But again, you definitely won't need to know the technical details like you would if you were preparing for the Professional Data Engineer or Professional ML Engineer exams.

Choosing Foundation Models

Foundation models are basically the pre-trained AI models that serve as starting points for various applications. The exam seems to test whether you can evaluate and select appropriate models based on what a business actually needs. The guide specifically mentions identifying "how to choose the appropriate foundation model for a business use case" and lists several factors: "modality, context window, security, availability and reliability, cost, performance, fine-tuning, and customization."

You'll probably need to understand what each factor means in practice. Modality is just the type of data the model handles, whether that be text, images, audio, or combinations of data. Context window is how much information the model can process at once, which matters for things like document analysis. Security considerations obviously matter for regulated industries. Availability and reliability affect whether you can actually use it in production. Cost impacts your budget. Performance determines speed and quality. Fine-tuning and customization options tell you how much you can adapt the model to your specific needs.

The exam likely expects you to match these characteristics to real business scenarios. For instance, a legal firm analyzing contracts probably needs a text model with a large context window and solid security features. A retail company building visual search would care more about image models with good performance. You should be able to make these connections without getting too deep into the technical weeds.
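One way to picture this matching exercise is as a simple filter over a model catalog. The sketch below is purely illustrative: the model names, context window sizes, and prices are invented, and `shortlist` is a hypothetical helper that only considers three of the factors from the guide (modality, context window, and cost).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    modalities: frozenset
    context_window_tokens: int
    cost_per_million_tokens: float


# Hypothetical catalog; none of these names or numbers describe real models.
CATALOG = [
    ModelOption("text-small", frozenset({"text"}), 32_000, 0.50),
    ModelOption("text-long", frozenset({"text"}), 1_000_000, 2.00),
    ModelOption("vision-multi", frozenset({"text", "image"}), 128_000, 3.00),
]


def shortlist(catalog, required_modalities, min_context_tokens, max_cost):
    """Return models that satisfy every requirement, cheapest first."""
    required = frozenset(required_modalities)
    matches = [
        m for m in catalog
        if required <= m.modalities
        and m.context_window_tokens >= min_context_tokens
        and m.cost_per_million_tokens <= max_cost
    ]
    return sorted(matches, key=lambda m: m.cost_per_million_tokens)


# Legal firm: long contracts, text only.
legal = shortlist(CATALOG, {"text"}, min_context_tokens=500_000, max_cost=5.0)
# Retailer: visual search needs image input.
retail = shortlist(CATALOG, {"image"}, min_context_tokens=10_000, max_cost=5.0)
print([m.name for m in legal], [m.name for m in retail])
```

A real evaluation would also weigh security, availability, and customization options, which don't reduce to a single number as neatly.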

Business Use Cases

The Generative AI Leader certification seems to examine where gen AI creates value. According to the guide, you must identify "business use cases where gen AI can create, summarize, discover, and automate." These four verbs basically define what generative AI can do for businesses.

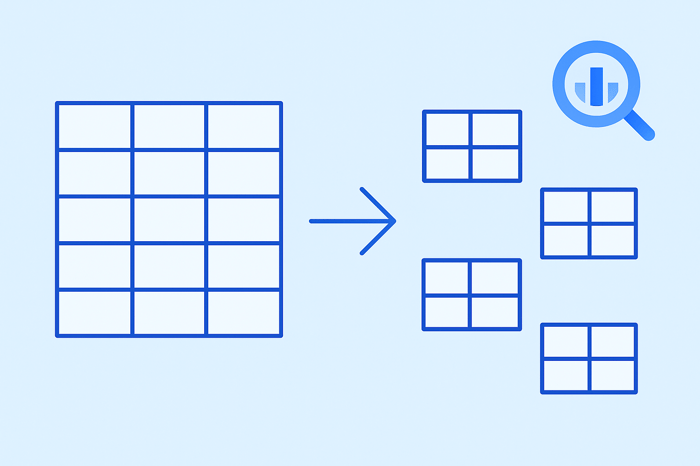

The exam also appears to test understanding of data fundamentals. You'll need to explain "the characteristics and importance of data quality and data accessibility in AI" including things like "completeness, consistency, relevance, availability, cost, format." Additionally, you must identify "the differences between structured and unstructured data" and "the differences between labeled and unlabeled data," with real-world examples of each. This is pretty basic stuff, but you should probably be able to explain why data quality matters for AI outcomes.

The Gen AI Landscape

The guide outlines five core layers of the gen AI landscape that candidates must understand:

Infrastructure, Models, Platforms, Agents, Applications

Each layer apparently builds on the previous one, from the computational foundation through to end-user solutions. Understanding these layers probably helps you frame where different Google products fit and how they work together.

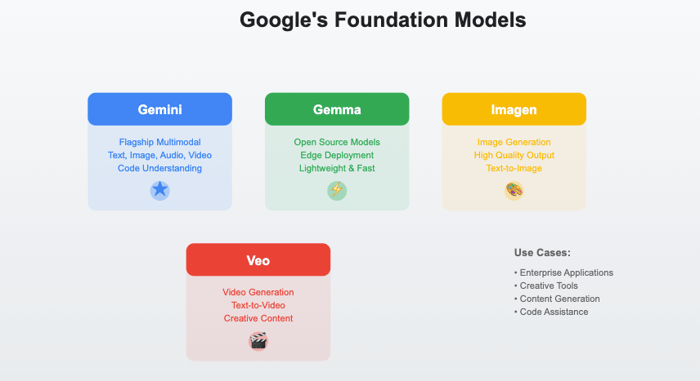

Google's Foundation Models

You'll likely need to know the strengths and use cases for Google's key models. The exam specifically tests knowledge of Gemini (Google's flagship multimodal model), Gemma (a family of lightweight open models), Imagen (image generation), and Veo (video generation). Each has different capabilities and use cases you should probably understand at a high level.

Exam Guide Section 2: Google Cloud's Gen AI Offerings (35% of the exam)

This is the largest section, focusing on Google's specific products and advantages. You'll probably spend a lot of time here since it's worth over a third of the exam.

Google's Competitive Position

The exam seems to test whether you understand Google's strengths in the AI space. First, you need to describe "how Google's AI-first approach and commitment to future innovation translate into cutting-edge gen AI solutions."

The guide emphasizes that Google Cloud has "an enterprise-ready AI platform" with specific characteristics: "responsible, secure, private, reliable, scalable." Each of these words likely matters for the exam. You should probably be able to explain how Google delivers on each one.

Prebuilt Gen AI Offerings

The Generative AI Leader exam apparently tests detailed knowledge of Google's prebuilt solutions. For example, you must recognize "the functionality, use cases, and business value of the Gemini app and Gemini Advanced" including features like Gems. Similarly, for Google Agentspace, you need to understand "Cloud NotebookLM API, multimodal search, and custom agent capabilities."

For example, you should be able to articulate: Why would someone choose Gemini for Google Workspace? What problems does it solve? How does it fit into existing workflows? You'll likely need practical answers to these questions.

Customer Experience Solutions

The guide emphasizes several customer-facing technologies. You'll need to understand "Google Cloud's external search offerings" including both Vertex AI Search and Google Search integration. The distinction probably matters: Vertex AI Search is for enterprise search, while Google Search integration gives you web-scale information.

The Customer Engagement Suite seems important too. You'll need to know how Conversational Agents, Agent Assist, Conversational Insights, and Google Cloud Contact Center as a Service work together. Think about the complete customer journey, not just individual features.

Developer Empowerment

Even though the Generative AI Leader certification targets business leaders, you still need to understand how Google helps developers. The exam covers "Vertex AI Platform" including things like Model Garden, Vertex AI Search, and AutoML. You should also understand "Google Cloud's RAG offerings" which include "prebuilt RAG with Vertex AI Search, RAG APIs."

The guide specifically mentions understanding "the functionality, use cases, and business value of using Vertex AI Agent Builder to build custom agents." This suggests you'll need to know not just what these tools do, but when a company would actually invest in building custom agents versus using prebuilt solutions.

Agent Tooling

The exam apparently explores how agents interact with the world. According to the guide, you must identify "how agents use tools to interact with the external environment and achieve tasks" through extensions, functions, data stores, and plugins.

You'll probably need some familiarity with relevant Google Cloud services and pre-built AI APIs. The guide lists a bunch: "Cloud Storage, databases, Cloud Functions, Cloud Run, Vertex AI, Speech-to-Text API, Text-to-Speech API, Translation API, Document Translation API, Document AI API, Cloud Vision API, Cloud Video Intelligence API, Natural Language API, Google Cloud API Library." You probably don't need deep knowledge of each, but you should understand what they do.

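Importantly, you must know "when to use Vertex AI Studio and Google AI Studio." These platforms serve different purposes: Google AI Studio is a browser-based tool for quickly prototyping with the Gemini API, while Vertex AI Studio sits inside Google Cloud and is geared toward enterprise development. Choosing the right one apparently matters for the exam.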

Exam Guide Section 3: Techniques to Improve Model Output (20% of the exam)

Overcoming Model Limitations

The exam seems to test whether you understand that AI models aren't perfect. You'll need to identify "common limitations of foundation models" including "data dependency, the knowledge cutoff, bias, fairness, hallucinations, edge cases." More importantly, you need to know how to address these issues.

The guide lists several approaches to fix model limitations:

grounding, retrieval-augmented generation (RAG), prompt engineering, fine-tuning, and human in the loop (HITL)

Each technique probably addresses specific problems. Grounding connects models to real data. RAG pulls in relevant information to improve responses. Prompt engineering is basically writing better instructions. Fine-tuning adapts models to specific domains. HITL means having humans check the AI's work. You'll likely need to know when to use each approach.
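To show the basic shape of RAG, here is a deliberately tiny sketch: it picks the most relevant snippet from a handful of company documents and places it in the prompt ahead of the question. Everything here is an assumption for illustration. The retriever just counts shared words, whereas real systems typically use semantic search, and none of this calls Vertex AI Search or any Google API.

```python
import re


def tokenize(text):
    """Lowercase a string and return its set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def grounded_prompt(question, documents, top_k=1):
    """Build a prompt that asks the model to answer from retrieved context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, top_k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Hypothetical first-party documents.
docs = [
    "Refund requests are accepted within 30 days of purchase.",
    "Our support line is open 9am to 5pm on weekdays.",
    "Shipping to Canada takes 5 to 7 business days.",
]
question = "How long after purchase can I request a refund?"
prompt = grounded_prompt(question, docs)
print(prompt)
```

The point for a business leader is the pattern, not the code: the model answers from your data at question time, so you can update the documents without retraining anything.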

Prompt Engineering Techniques

The exam apparently goes deep on prompt engineering as well. You need to understand what it is and why it matters for working with large language models. The exam tests knowledge of various techniques including zero-shot (no examples), one-shot (one example), few-shot (multiple examples), role prompting (giving the AI a persona), and prompt chaining (feeding the output of one prompt into the next).
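The difference between zero-shot, one-shot, and few-shot prompts is easiest to see side by side. The sketch below assembles each variant as plain text; `build_prompt` and the `Input:`/`Output:` layout are just one hypothetical way to format examples, not a format the exam or any Google tool requires.

```python
def build_prompt(instruction, query, examples=(), role=None):
    """Assemble a prompt with an optional persona and zero or more examples."""
    parts = []
    if role:
        parts.append(f"You are {role}.")  # role prompting
    parts.append(instruction)
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}\nOutput: {example_output}")
    parts.append(f"Input: {query}\nOutput:")  # the case we want answered
    return "\n\n".join(parts)


instruction = "Classify the sentiment of the customer review as positive or negative."
query = "The checkout process was confusing and slow."
samples = [
    ("Delivery was fast and the product works great.", "positive"),
    ("The item arrived broken and support never replied.", "negative"),
]

zero_shot = build_prompt(instruction, query)
one_shot = build_prompt(instruction, query, examples=samples[:1])
few_shot = build_prompt(instruction, query, examples=samples)
with_role = build_prompt(instruction, query, role="a customer support analyst")
print(few_shot)
```

Prompt chaining would take the model's answer to one of these prompts and pass it into a follow-up prompt, for example asking for a suggested reply once the sentiment is known.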

There are also advanced techniques you'll probably need to know about. The guide mentions "chain-of-thought prompting, and ReAct prompting" as methods you should understand.

Grounding Techniques

Grounding seems particularly important. The exam tests whether you can describe "the concept of grounding in LLMs and differentiating between grounding with first-party enterprise data, third-party data, and world data." Each type serves different purposes. First-party data is your own company's information. Third-party data comes from external providers or partners. World data comes from web searches.

Google's grounding offerings include "Pre-built RAG with Vertex AI Search," "RAG APIs," and "Grounding with Google Search." You'll probably need to know when each makes sense. The guide also mentions understanding "how sampling parameters and settings are used to control the behavior of gen AI models" including things like token count, temperature, top-p, safety settings, and output length. Temperature and top-p basically control how creative or conservative the AI is, while token count and output length cap how much it reads and writes, and safety settings filter what it's allowed to return.
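For intuition on temperature and top-p, here is a small sketch of the math commonly used behind those two settings. It is a simplified illustration, not how any particular Google model is implemented: the scores are made up, and the two helper functions are hypothetical.

```python
import math


def softmax_with_temperature(scores, temperature=1.0):
    """Turn raw scores into probabilities; low temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p=0.9):
    """Keep the most likely tokens until their combined probability reaches top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}  # renormalized survivors


scores = [2.0, 1.0, 0.1]  # made-up scores for three candidate words
conservative = softmax_with_temperature(scores, temperature=0.5)
creative = softmax_with_temperature(scores, temperature=2.0)
narrowed = top_p_filter([0.5, 0.3, 0.15, 0.05], top_p=0.75)
print(conservative, creative, narrowed)
```

At low temperature the top candidate takes most of the probability, so output is more predictable. At high temperature the probabilities flatten and less likely words get picked more often. Top-p then trims the long tail of unlikely words before one is sampled.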

Exam Guide Section 4: Business Strategies for Successful Gen AI Solutions (15% of the exam)

Implementation Steps

The exam apparently tests whether you can guide organizations through AI adoption. You need to recognize "different types of gen AI solutions" and identify "key factors that influence gen AI needs" including "business requirements, technical constraints."

You'll probably need to describe "how to choose the right gen AI solution for a specific business need" and identify "the steps to integrate gen AI into an organization." You also need to identify "techniques to measure the impact of gen AI initiatives." ROI matters, even for AI.

Security Considerations

Security seems to be a big deal throughout the exam. You need to explain "security throughout the ML lifecycle" and understand "the purpose and benefits of Google's Secure AI Framework (SAIF)." You'll need to recognize "Google Cloud security tools and their purpose" including "secure-by-design infrastructure, Identity and Access Management (IAM), Security Command Center, and workload monitoring tools."

Responsible AI

The exam wraps up with responsible AI considerations. You'll need to explain "the importance of responsible AI and transparency" and describe "privacy considerations" including "privacy risks, data anonymization and pseudonymization."
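The difference between pseudonymization and anonymization is easier to remember with an example. In this sketch, pseudonymization replaces an email with a keyed hash, so the same customer always maps to the same token but the token can't be read back without the key. The anonymization step here simply drops direct identifiers, which is the most basic form and often not enough on its own in practice. The field names, the key, and both helper functions are hypothetical.

```python
import hashlib
import hmac


def pseudonymize(value, secret_key):
    """Replace a value with a stable keyed token (HMAC-SHA256, shortened)."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]  # shortened for readability in this example


def drop_identifiers(record, drop_fields=("name", "email")):
    """Remove direct identifiers from a record entirely."""
    return {k: v for k, v in record.items() if k not in drop_fields}


key = b"demo-key-keep-real-keys-in-a-secret-manager"  # placeholder key
customer = {"name": "Ada Example", "email": "ada@example.com", "plan": "pro"}

pseudonymized = dict(customer, email=pseudonymize(customer["email"], key))
pseudonymized.pop("name")
anonymized = drop_identifiers(customer)
print(pseudonymized, anonymized)
```

Pseudonymized data can still be linked across records (and re-identified by whoever holds the key), which is why it is usually still treated as personal data.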

The guide emphasizes understanding "the implications of data quality, bias, and fairness" as well as "the importance of accountability and explainability in AI systems." These aren't just nice-to-have features. They're fundamental to building AI systems that organizations can actually trust and deploy.

What Google Thinks a "Generative AI Leader" Is

Based on this exam structure, Google's vision of a Generative AI Leader becomes pretty clear. This person bridges business and technology. They don't build AI systems but understand them well enough to make smart decisions. They can spot opportunities, evaluate solutions, and guide implementation while keeping security, responsibility, and business value in mind.

The heavy emphasis on Google's specific products tells you something too. This leader should be fluent in Google's ecosystem. They know when to use Vertex AI versus Google AI Studio, understand why TPUs matter, and can explain internally why Google's tools would benefit their organization.

The Generative AI Leader exam's structure, which moves from concepts to products to techniques to strategy, mirrors how Google thinks these leaders should approach the field. Start with understanding the basics, learn what tools are available, figure out how to optimize them, then develop comprehensive strategies.

With this exam, Google is not focused on certifying AI experts. It's more focused on creating informed decision-makers who can navigate the gen AI landscape, make sound technology choices, and drive change in the business.