When you create a virtual machine (VM) in Google Cloud Compute Engine, you will be prompted to select network settings as part of the setup process.

The settings you choose define how the VM communicates with your other internal cloud resources and the outside world, and how other services or users can reach it. They influence everything from basic web access to load balancing and security policies.

This is part of the curriculum you need to cover in order to pass the Professional Cloud Architect exam. If you'd like to learn more, you can explore my PCA course.

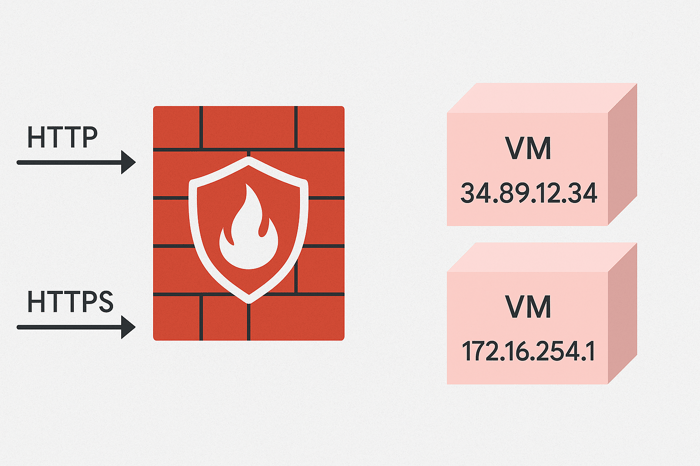

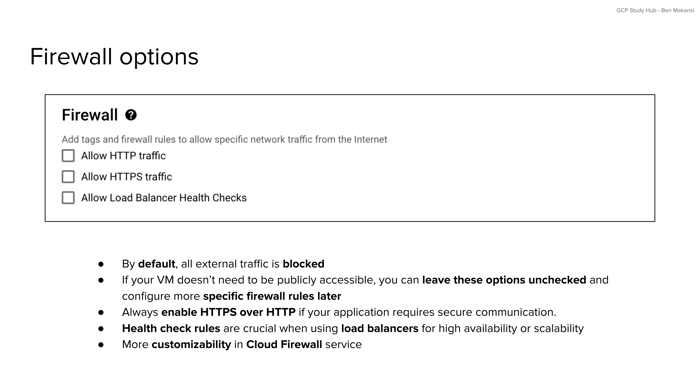

Firewall Options

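By default, incoming traffic to a Compute Engine VM is blocked unless a firewall rule allows it: every VPC network has an implied rule that denies all ingress. (The auto-created default network also comes with pre-populated rules that allow SSH, RDP, and ICMP.) This default behavior protects new Compute Engine instances from unwanted exposure until you explicitly decide which types of traffic should be allowed. During the setup process, Google Cloud provides a few common firewall options that can be enabled quickly: HTTP, HTTPS, and Load Balancer Health Checks.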

Allowing HTTP traffic opens port 80, which is typically used for basic, unencrypted web traffic. This might be suitable for testing or for public content that does not require confidentiality. In contrast, HTTPS traffic runs on port 443 and supports secure, encrypted communication. This is the standard for production web applications, especially when sensitive data or authentication is involved. Note that the firewall option only opens the port: to actually serve HTTPS, you still need to install and manage a TLS (SSL) certificate on the VM or on a load balancer in front of it.

The third option, Load Balancer Health Checks, is essential when using a load balancer to distribute traffic across multiple Compute Engine VMs. Without this setting, your load balancer may not be able to determine whether your VM is healthy and ready to receive traffic, which could lead to service disruptions.
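To make the health check option more concrete, here is a minimal Python sketch of what such a rule has to permit: traffic from Google's health check probers. The two CIDR ranges below are the ones commonly documented for Application Load Balancer health checks, but treat them as an assumption and confirm them against the current documentation for your load balancer type. The function name `is_health_check_probe` is made up for this illustration.

```python
import ipaddress

# Assumed probe source ranges for Application Load Balancer health checks.
# Verify against the current Google Cloud documentation before relying on them.
HEALTH_CHECK_RANGES = [
    ipaddress.ip_network("35.191.0.0/16"),
    ipaddress.ip_network("130.211.0.0/22"),
]


def is_health_check_probe(source_ip: str) -> bool:
    """Return True if source_ip falls inside one of the assumed probe ranges."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in network for network in HEALTH_CHECK_RANGES)


if __name__ == "__main__":
    # 203.0.113.7 is a documentation-only address used as a non-probe example.
    for ip in ("35.191.10.20", "130.211.1.5", "203.0.113.7"):
        print(ip, is_health_check_probe(ip))
```

If the firewall does not admit traffic from these ranges, the probes never reach the VM, and the load balancer treats the backend as unhealthy even when the application itself is running fine.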

If your VM is not meant to be publicly accessible, you can safely leave these options disabled. More customized firewall rules can always be created later as VPC firewall rules (part of Cloud Next Generation Firewall), which allow fine-grained control over traffic sources, protocols, ports, and priorities. For the exam, it is important to remember that HTTPS is generally preferred over HTTP, and that health checks are necessary for reliable load balancing behavior.
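The role of priorities in custom rules is easier to see with a small model. The following Python sketch is a simplified simulation, not the real evaluation engine: it assumes rules match on a single TCP port only, and the `Rule` class and `evaluate` function are hypothetical names. It reflects two documented behaviors: a lower priority number wins, and when two matching rules share a priority, deny overrides allow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    priority: int  # 0-65535; a lower number means a higher priority
    action: str    # "allow" or "deny"
    port: int      # simplification: each rule matches one TCP port


def evaluate(rules, port):
    """Return the action for ingress traffic on the given port."""
    matching = [rule for rule in rules if rule.port == port]
    if not matching:
        # Nothing matched, so the implied deny-ingress rule applies.
        return "deny"
    # Lowest priority number wins; on a tie, deny sorts ahead of allow.
    winner = min(matching, key=lambda rule: (rule.priority, rule.action != "deny"))
    return winner.action


if __name__ == "__main__":
    rules = [
        Rule("allow-https", priority=1000, action="allow", port=443),
        Rule("block-https", priority=900, action="deny", port=443),
    ]
    print(evaluate(rules, 443))  # the priority-900 deny outranks the allow
    print(evaluate(rules, 22))   # no matching rule, so the implied deny applies
```

Real rules also match on direction, source ranges, protocols, and targets, so this is only meant to show how priority ordering resolves conflicts.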

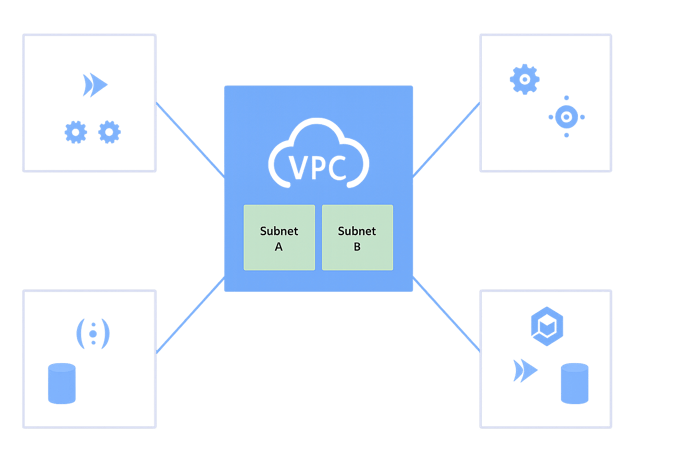

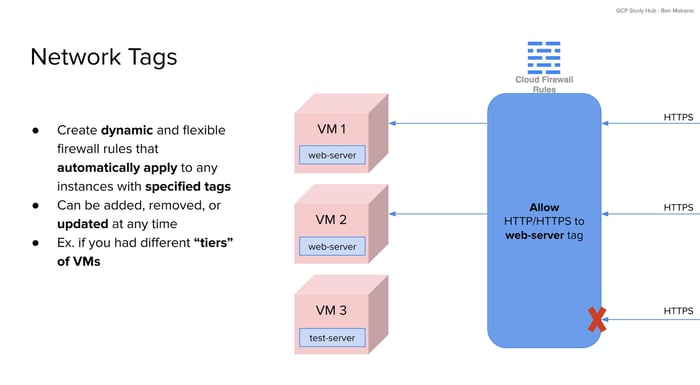

Network Tags: Applying Rules Dynamically

Network tags are one of the most powerful tools for managing firewall behavior across groups of VMs. Tags are labels that can be assigned to instances, and firewall rules can be written to apply only to instances that carry specific tags. This creates a dynamic and scalable way to control access without having to modify firewall rules every time a new VM is added or an old one is removed.

For example, if two Compute Engine VMs are tagged as “web-server,” and you define a rule that allows HTTPS traffic to instances with that tag, both instances will accept secure web requests. If another VM is tagged only as “test-server,” it does not match the rule, so HTTPS traffic to it will be blocked by the implied deny ingress rule. This tagging system allows firewall behavior to follow the role of the VM rather than its individual identity.
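The web-server and test-server scenario can be sketched as a short Python model. This is an illustration under simplifying assumptions, not how the platform is implemented: every rule here is an allow rule with at least one target tag and a single port, and `Instance`, `AllowRule`, and `ingress_allowed` are hypothetical names. (In a real VPC, a rule with no target tags applies to every instance in the network.)

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    name: str
    tags: set = field(default_factory=set)


@dataclass
class AllowRule:
    name: str
    target_tags: set
    port: int


def ingress_allowed(instance, port, rules):
    """Return True if any allow rule targets one of the instance's tags on this port."""
    for rule in rules:
        if rule.port == port and rule.target_tags & instance.tags:
            return True
    # No rule matched: the implied deny-ingress rule blocks the traffic.
    return False


if __name__ == "__main__":
    rules = [AllowRule("allow-https", target_tags={"web-server"}, port=443)]
    fleet = [
        Instance("web-1", {"web-server"}),
        Instance("web-2", {"web-server"}),
        Instance("test-1", {"test-server"}),
    ]
    for vm in fleet:
        print(vm.name, ingress_allowed(vm, 443, rules))
```

Adding a third web VM requires no rule change in this model: giving it the `web-server` tag is enough for the existing rule to apply.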

Tags also make it easier to manage infrastructure changes. If you want a new VM to behave like an existing one, simply assign it the same network tag. The associated firewall rules will apply automatically. This approach is especially helpful when managing applications with multiple tiers or when using auto-scaled instance groups. From an exam perspective, it’s worth understanding how tags support policy enforcement at scale without the need to reconfigure rules manually.

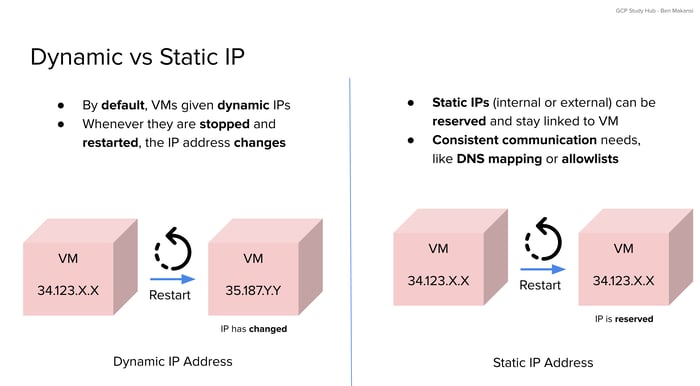

Dynamic vs Static IP Addresses

Another important decision during VM configuration is whether to use a dynamic or static external IP address. By default, VMs are assigned a dynamic address, which Google Cloud calls an ephemeral external IP. It is released when the VM is stopped, so the VM may come back with a different address when it is started again (a simple reboot does not change it). This behavior is acceptable for temporary workloads or systems that are not intended to be consistently reachable from the same address.

However, some scenarios require a fixed external IP. For example, if a domain name is mapped to your VM or if external systems use an allowlist based on IP addresses, a changing address would cause disruptions. A reserved static IP stays assigned to your project until you release it, so the address remains the same even if the VM is stopped, restarted, or recreated. It can also be reassigned to a different VM if needed, providing flexibility along with stability.
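As a rough sketch of the workflow, the Python snippet below assembles the two `gcloud` commands typically involved: reserving a regional static address, then creating a VM that uses it. It only builds and prints the commands and does not run them. The resource names (`web-ip`, `web-1`), region, and zone are placeholders chosen for this example, and the helper function names are hypothetical; check the flags against the current `gcloud` reference before using them.

```python
import shlex


def reserve_address_cmd(address_name, region):
    """Build the command that reserves a regional static external IP."""
    return [
        "gcloud", "compute", "addresses", "create", address_name,
        f"--region={region}",
    ]


def create_vm_cmd(vm_name, zone, address_name):
    """Build the command that creates a VM using a reserved address."""
    return [
        "gcloud", "compute", "instances", "create", vm_name,
        f"--zone={zone}",
        f"--address={address_name}",
    ]


if __name__ == "__main__":
    # Placeholder names; the address region must contain the VM's zone.
    commands = [
        reserve_address_cmd("web-ip", "us-central1"),
        create_vm_cmd("web-1", "us-central1-a", "web-ip"),
    ]
    for command in commands:
        print(shlex.join(command))
```

Keeping the commands as argument lists makes it straightforward to pass them to `subprocess.run` later without worrying about shell quoting.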

From an architectural standpoint, static IPs are essential for services that must be continuously available at the same network endpoint. The exam may ask you to recognize when consistent addressing is required and how static IPs meet that need.

Conclusion

Firewall rules, network tags, and IP address management are core parts of deploying secure and reliable Compute Engine instances. Understanding how these settings work together helps you make informed decisions about access control, scalability, and operational consistency. For the Professional Cloud Architect exam, focus on when to use predefined firewall options, how tags simplify rule management, and why static IPs are necessary for consistent external communication. These concepts are highly likely to appear on the exam, and they are also useful in practice when designing well-governed cloud environments.